Optimizing PostgreSQL and the operating system for a heavy write workload involves careful tuning of both PostgreSQL settings and OS configurations. Here’s a concise guide to the best configurations:

PostgreSQL Configuration

- Memory Settings:

shared_buffers: Set 25-40% of total RAM. Purpose It stores frequently accessed data and index pages to reduce the need for disk I/O, thereby improving performance.work_mem: Set 16MB to 64MB (higher for complex queries). Each time a query is executed, PostgreSQL can usework_memfor operations like sorting and hashing. If the memory required for these operations exceeds thework_memsetting, PostgreSQL will spill data to disk, which can significantly degrade performance.maintenance_work_mem: Set 256MB to 1GB for maintenance operations. For heavy write operations like VACUUM or CREATE INDEX, consider setting this to a few hundred MB. This setting allows PostgreSQL to perform maintenance tasks more efficiently by using more memory for these operations, reducing the need for disk I/O and speeding up the execution time of tasks like index creation and table vacuuming.

- Write-Ahead Logging (WAL):

wal_level: Set tominimalif replication is not needed. It controls the level of detail in the WAL, which affects the ability to support replication and point-in-time recovery (PITR). Set tominimalif replication is not needed.synchronous_commit: Set tooffto improve write performance (some data loss possible on crash). This setting dictates whether a transaction must wait for the WAL to be flushed to disk before the transaction is considered committed.commit_delay: Set to 10-20 ms to allow batching of commits. It specifies a delay (in microseconds) that PostgreSQL will wait before flushing the WAL (Write-Ahead Log) to disk after a commit operation. This can allow multiple transactions to be batched together, potentially improving efficiency.wal_buffers: Increase to 16MB or more, depending on workload. Purpose for provides a buffer area for WAL data to be stored in memory temporarily. This helps reduce the frequency of disk writes, improving performance during heavy write operations.

- Checkpoints:

checkpoint_timeout: Increase to 15-30 minutes. This setting defines the maximum time interval (in seconds) between two consecutive checkpoints. A checkpoint is crucial for database recovery and ensures that data is safely written to disk.max_wal_size: Increase to 2GB or more. This setting controls the maximum amount of WAL data that can be generated before PostgreSQL initiates a checkpoint. A largermax_wal_sizeallows more WAL data to accumulate, which can lead to fewer checkpoints and potentially improve performance during heavy write operations.checkpoint_completion_target: Set to 0.7 or higher. It defines the fraction of thecheckpoint_timeoutthat PostgreSQL aims to use for the checkpoint process. A higher value indicates that PostgreSQL will spread the I/O generated by the checkpoint over a longer period, reducing I/O spikes.

- Autovacuum:

- Ensure it runs effectively. Adjust:

autovacuum_vacuum_cost_limit: Increase to 2000 or more. This setting helps regulate the resource usage of the autovacuum process by limiting how much I/O it can perform during each run. This is important for balancing database maintenance with the performance of normal database operations.autovacuum_naptime: Set to 1 minute. This setting controls the frequency of autovacuum operations, impacting how quickly the database can reclaim space and update statistics for query planning.

- Ensure it runs effectively. Adjust:

- Connection Settings:

max_connections: Set according to your application needs, but monitor RAM usage. This setting limits the number of simultaneous client connections to the database. It includes connections from applications, users, and background processes.

- Other Important Parameters:

effective_cache_size: Set to about 75% of total RAM. It gives the query planner an estimate of how much memory is available for the database to cache disk pages, including both the operating system’s file system cache and PostgreSQL’s own shared buffers.default_statistics_target: Set to 100 for better query planning. This setting specifies the number of sample values that PostgreSQL will collect for each column when gathering statistics. Higher values lead to more detailed statistics, which can improve the accuracy of query planning and execution.Max_files_per_process: used to control the maximum number of file descriptors that can be opened by a single PostgreSQL backend process. The default value formax_files_per_processis usually set to 1000, but this can vary based on the operating system and PostgreSQL version

Operating System Configuration

- Filesystem:

- Use XFS or EXT4 with the

noatimemount option to reduce write overhead.

- Use XFS or EXT4 with the

- I/O Scheduler:

- Use the

deadlineornoopI/O scheduler for improved performance under heavy write loads.

- Use the

- Swappiness:

- Set

vm.swappinessto a low value (e.g., 10) to minimize swapping.

- Set

- Disk Configuration:

- Use SSDs for data directories and WAL for lower latency.

- Consider RAID 10 for redundancy and performance.

- Kernel Parameters:

- Adjust

fs.file-maxandfs.nr_opento accommodate more open files. - Example:

echo "fs.file-max = 100000" >> /etc/sysctl.conf

sysctl -p

- Adjust

- Memory Management:

- Ensure adequate RAM and adjust limits (

ulimit) appropriately.

- Ensure adequate RAM and adjust limits (

- Networking:

- Optimize network settings if using replication or high traffic.

- Open Files

- Set 65536.

You can set the limits in the/etc/security/limits.conffile:postgres soft nofile 65536postgres hard nofile 65536You may also need to adjust the system-wide limit in

/etc/sysctl.confor with theulimitcommand.To check the current limits for open files, you can use:

ulimit– nOpen files refer to any files that are currently in use by the PostgreSQL server, including data files, WAL (Write-Ahead Logging) files, configuration files, and temporary files. Each connection to the database also consumes a file descriptor

- Set 65536.

Monitoring and Tuning

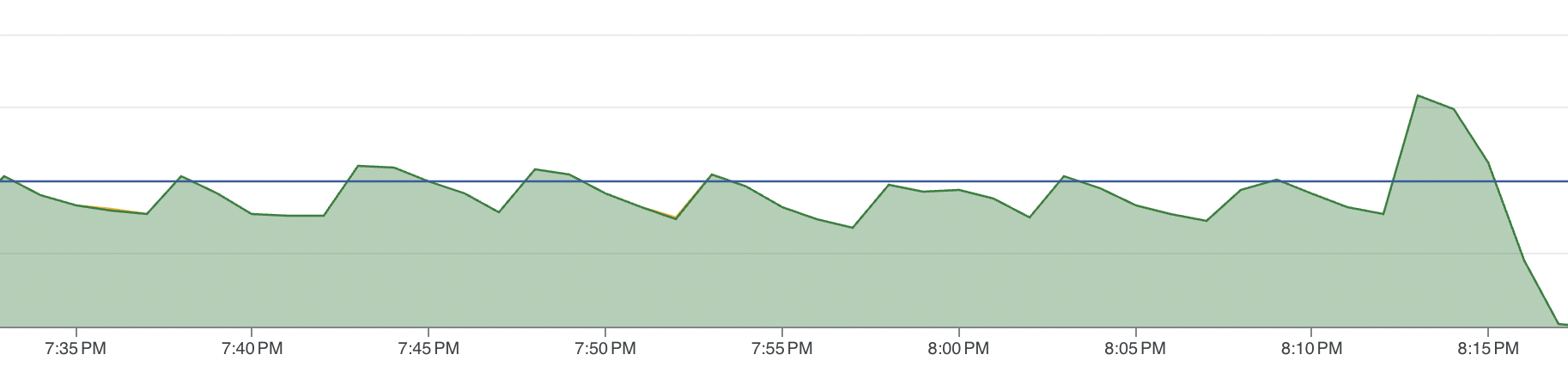

- Use

pg_stat_activity,pg_stat_statements, and tools liketop,iostat, orvmstatfor monitoring. - Benchmark using

pgbenchor similar tools to simulate workloads and adjust configurations based on results. - Use PMM for dashboard monitoring database.

Conclusion

These configurations provide a solid starting point for optimizing PostgreSQL and the OS for heavy write workloads. Always monitor performance and adjust settings based on your specific workload characteristics and system resources.